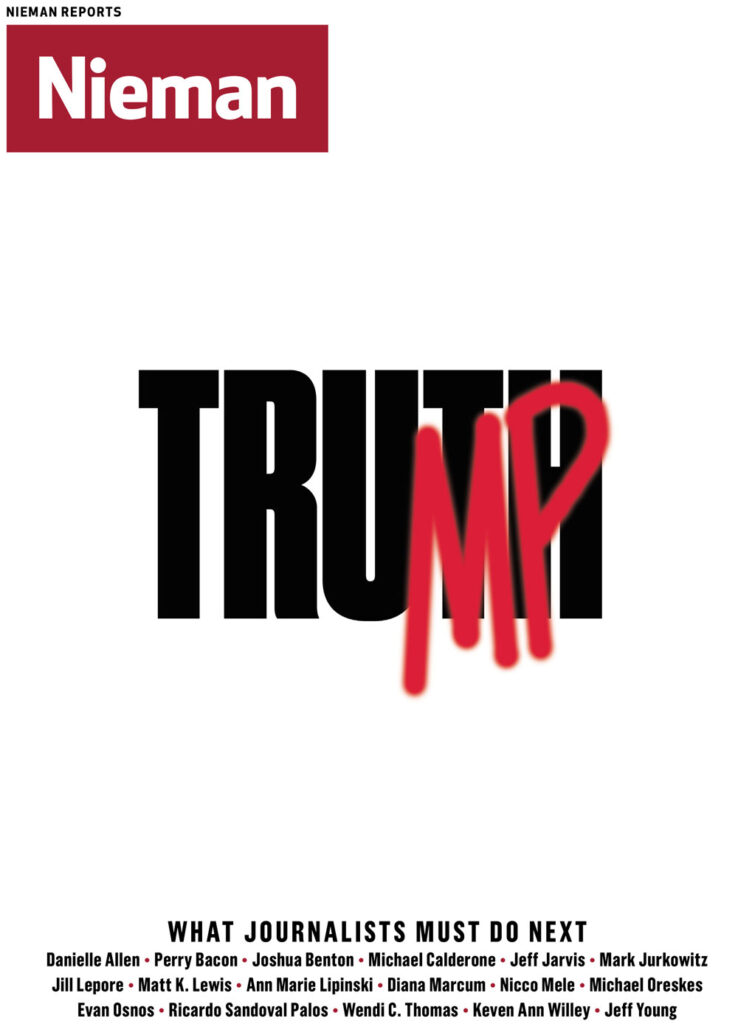

One way to think of the job journalism does is telling a community about itself, and on those terms the American media failed spectacularly this election cycle. That Donald Trump’s victory came as such a surprise—a systemic shock, really—to both journalists and so many who read or watch them is a marker of just how bad a job we did. American political discourse in 2016 seemed to be running on two self-contained, never-overlapping sets of information. It took the Venn diagram finally meeting at the ballot box to make it clear how separate the two solitudes really are.

The troubling morning-after realization is that the structures of today’s media ecosystem encourage that separation, and do so a little bit more each day. The decline of the mass media’s business models; the continued rise of personalized social feeds and the content that spreads easily within them; the hollowing-out of reporting jobs away from the coasts: These are, like the expansion of the universe, pushing us farther apart in all directions.

There’s plenty of blame to go around, but the list of actors has to start with Facebook. And for all its wonders—reaching nearly 2 billion people each month, driving more traffic and attention to news than anything else on earth—it’s also become a single point of failure for civic information. Our democracy has a lot of problems, but there are few things that could impact it for the better more than Facebook starting to care—really care—about the truthfulness of the news that its users share and take in.

Some of the fake news on Facebook is driven by ideology, but a lot is driven purely by the economic incentive structure Facebook has created: The fake stuff, when it connects with a Facebook user’s preconceived notions or sense of identity, spreads like wildfire. (And it’s a lot cheaper to make than real news.)

One example: I’m from a small town in south Louisiana. The day before the election, I looked at the Facebook page of the current mayor. Among the items he posted there in the final 48 hours of the campaign: Hillary Clinton Calling for Civil War If Trump Is Elected. Pope Francis Shocks World, Endorses Donald Trump for President. Barack Obama Admits He Was Born in Kenya. FBI Agent Who Was Suspected Of Leaking Hillary’s Corruption Is Dead.

These are not legit anti-Hillary stories. (There were plenty of those, to be sure, both on his page and in this election cycle.) These are imaginary, made-up frauds. And yet Facebook has built a platform for the active dispersal of these lies—in part because these lies travel really, really well. The pope’s “endorsement” has over 961,000 Facebook shares. The Snopes piece noting the story is fake has but 33,000.

In a column just before the election, The New York Times’s Jim Rutenberg argued that “the cure for fake journalism is an overwhelming dose of good journalism.” I wish that were true, but I think the evidence shows that it’s not. There was an enormous amount of good journalism done on Trump and this entire election cycle. For anyone who wanted to take it in, the pickings were rich.

The problem is that not enough people sought it out. And of those who did, not enough of them trusted it to inform their political decisions. And even for many of those, the good journalism was crowded out by the fragmentary glimpses of nonsense.

I used to be something of a skeptic when it came to claims of “filter bubbles”—the sort of epistemic closure that comes from only seeing material you agree with on social platforms. People tend to click links that align with their existing opinions, sure—but isn’t that just an online analog to the fact that our friends and family tend to share our opinions in the real world too?

But I’ve come to think that the rise of fake news—and of the cheap-to-run, ideologically driven aggregator sites that are only a few steps up from fake—has weaponized those filter bubbles. There were just too many people voting in this election because they were infuriated by made-up things they read online.

What can Facebook do to fix this problem? One idea would be to hire editors to manage what shows up in its Trending section—one major way misinformation gets spread. Facebook canned its Trending editors after it got pushback from conservatives; that was an act of cowardice, and since then, fake news stories have been algorithmically pushed out to millions with alarming frequency.

Another would be to hire a team of journalists and charge them with separating at least the worst of the fake news from the stream. Not the polemics (from either side) that sometimes twist facts like balloon animals—I’m talking about the outright fakery. Stories known to be false could be downweighted in Facebook’s algorithm, and users trying to share them could get a notice telling them that the story is fake. Sites that publish too much fraudulent material could be downweighted further or kicked out entirely.

Would this or other ideas raise thorny issues? Sure. This would be easy to screw up—which, I’m sure, is why Facebook threw up its hands at the pushback to a human-edited Trending section and why it positions itself as a neutral connector of its users to content it thinks they will find pleasing. I don’t know what the right solution would be—but I know that getting Mark Zuckerberg to care about the problem is absolutely key to the health of our information ecosystem.