As medical reporters, we laugh at the tale about the drug-treatment researcher who said, “Thirty-three percent were cured, 33 percent died—and the third mouse got away.” We know that the more patients (or mice) in a study, the better. Big numbers help make a study’s findings “statistically significant.” This term simply means it’s unlikely that the study’s key statistical findings are due to chance alone. But merely obtaining statistical significance doesn’t prove that the study’s conclusions are medically significant or correct. So, as reporters, we must probe further and be alert for the numbers games and other things that might lead us awry.

Two journalistic instincts, healthy skepticism and good questioning, come in handy on the medical beat. And, if you don’t report in this area, a peek into what we do will make you a more astute consumer of medical news and a more careful viewer of medical claims on the Internet.

Hints About Medical Coverage

What follows are my thoughts on what scientists and reporters must consider.

Remember the rooster who thought that his crowing made the sun rise? Even with impressive numbers, association doesn’t prove causation. A virus found in a patient’s body might be an innocent bystander, rather than the cause of the illness. A chemical in a town’s water supply might not have caused illnesses there, either. More study and laboratory work are necessary to certify cause-and-effect links.

Let me cite one current case in which precisely this caution is needed. News reports have speculated about whether some childhood immunizations might be triggering many cases of autism. To me, as a reporter, this has the sound of coincidence, not causation. Autism tends to appear in children about the time they get a lot of their vaccines. Is additional study warranted? Probably. But there is concern that in the meantime parents will delay having children immunized against measles and other dangerous diseases. In a lot of the press reports, the missing figures are the tolls these childhood diseases took before vaccines were available.

Always take care in reporting claims of cures. The snake-oil salesman said, “You can suffer from the common cold for seven days, or take my drug and get well in one week.” Patients with some other illness might be improving simply because their disease has run its natural course, not because of the experimental drug they’re taking. Care is needed to sort claims made about what has cured a particular ailment.

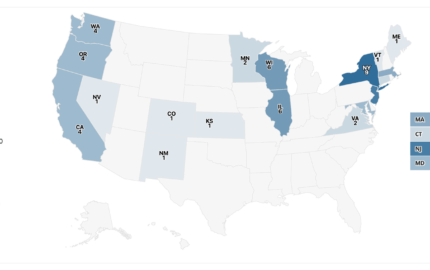

In covering stories about disease outbreaks and patterns, be cautious about case numbers. There was a story recently about how Lyme disease cases have soared in some states. The article cited statistics, but buried some important cautions. Improved diagnosis and reporting of Lyme cases might be behind much of this increase. The journalistic antidote: Refer to such numbers as reported cases and explain why you are doing so.

Sort through when you might be dealing with the power of suggestion. A large federal study examining quality of life issues recently concluded that hormone therapy for menopause doesn’t benefit women in many of the ways long taken for granted. How could so many women, for so long, have concluded that the hormone therapy made them more energetic and less depressed? A patient who wants and expects to see a drug work may mistakenly attribute all good feelings to the medication.

The “gold standard” of clinical research is a double-blind, placebo-controlled study, with patients randomly assigned to either a treatment group or to a comparison (no treatment) group. Blinding means that, until the study is completed, neither the researchers nor the patients know who is getting the experimental treatment and who is getting only a placebo. This keeps expectations and hopes from coloring reported results. Less rigorous studies still might be important, but findings from them require more questioning by journalists. When their findings are reported as news, the absence of the “gold standard” should be stated.

Side effects are a big part of medical coverage and need to be handled properly. Some drugs have been taken off the market after serious side effects were discovered, long after the original studies found no problems. A serious side effect that strikes only one in every 10,000 patients might have been missed in the original studies involving a few thousand patients. The problem becomes apparent only after the drug is marketed and then taken by more and more people.
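The arithmetic behind this point can be sketched in a few lines of Python. This is only an illustration under stated assumptions: "a few thousand patients" is taken to mean 3,000, each patient is assumed to face the same independent one-in-10,000 risk, and the function name is made up for this example.

```python
def chance_of_seeing_no_cases(risk_per_patient, patients):
    """Probability that not a single patient shows the side effect.

    Assumes every patient has the same, independent risk.
    """
    return (1 - risk_per_patient) ** patients


risk = 1 / 10_000            # one in every 10,000 patients (from the text)
original_study = 3_000       # assumed size of "a few thousand patients"
after_marketing = 100_000    # assumed number of users once the drug is sold

missed_in_study = chance_of_seeing_no_cases(risk, original_study)
missed_on_market = chance_of_seeing_no_cases(risk, after_marketing)

print(f"Chance the original study sees no cases: {missed_in_study:.0%}")
print(f"Chance of still seeing no cases after marketing: {missed_on_market:.4%}")
```

Under these assumptions, the original study would more likely than not see no cases at all, while the side effect becomes almost impossible to miss once many more people take the drug.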

There is a danger in citing averages. Remember: People drown in lakes with an average depth of four feet when it’s nine feet deep in the middle. We hear a claim that the average person in a weight-loss study lost 50 pounds. But maybe there were only three people in the study. A 400-pound man took off 150 pounds, but the other two patients couldn’t shed a single pound. Still interesting. But the average figure didn’t tell you the story.
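A quick check of the weight-loss example, written as a minimal Python sketch. The three figures come from the example above; comparing the average with the median is one common way to spot this problem, not the only one.

```python
from statistics import mean, median

# Pounds lost by the three patients in the example above.
pounds_lost = [150, 0, 0]

average_loss = mean(pounds_lost)    # the figure the claim is built on
typical_loss = median(pounds_lost)  # the middle patient's result

print(f"Average loss: {average_loss} pounds")
print(f"Median loss:  {typical_loss} pounds")
```

When the average and the median are this far apart, ask for the individual results, or at least the range, before reporting the average.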

When you do a story about a new medical treatment, find out what it costs and whether the cost will be covered by most insurance plans. I’ve answered many phone calls from readers after reporting about some new medical treatment and forgetting to deal with the dollar figures. In reporting on research, cost estimates are important to our readers and viewers. Some treatments might be so expensive that they are unlikely ever to see widespread use.

Remind readers, listeners and viewers about the certainty of some uncertainty. Experts keep changing their minds about whether we should cut back on fats or carbs to keep our waistlines trim. In the eyes of some, these and other flip-flops give science a bad name. Actually, this is science working just as it is supposed to work, and it helps if we, as reporters, include this in our stories.

Readers, listeners and viewers should also know that science looks at the statistical probability of what’s true. Few, if any, new treatments would ever reach patients if proof-positive were required. Many, many lives would be lost. Science builds on old research findings in seeking new advances. In the process, old ideas are continually retested and modified if necessary.

The Wisdom of Good Medical Journalists

RELATED ARTICLE

"What makes a good medical reporter?"

- Council for the Advancement of Science Writing

Wise medical journalists tell their viewers and readers about the degree of uncertainty involved in what they are reporting. They use more words like “evidence indicates” and “concludes” and fewer words like “proof.” I only wish I had been wise more often during my career.

Keep in mind that when a study’s findings agree with other scientific studies and knowledge, that’s a big plus. When they don’t fit with what’s already known (or thought to be known), caution flags must be raised. The burden rests with those seeking to change medical dogma. But when that burden is met, there is a hell of a story to tell.

Big numbers aren’t always needed to tell important stories. Small studies can open big research areas. It’s just that reporting on these smaller studies requires “early studies” warning labels. On the other hand, even the first few cases of a newly recognized disease (such as the mysterious respiratory illness called SARS) can be a concern. A single confirmed case of smallpox could be a looming disaster, signaling a new terrorist threat.

When we hear of a high number of cancer cases clustered in a neighborhood or town, more study might be needed, not panic spread. Statistically, there might be many more cases than expected. But wait. This could be happening by chance alone; with so many communities across our nation, a few will have more than their share of cancer cases. And with cancer, we hear about how experimental early-detection tests might find very tiny tumors. But is it early enough to make a difference? Or is treatment then the right approach? Extra caution, too, is needed in interpreting what treatment tests on lab animals tell us.
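The point about clusters arising by chance can be made concrete with a rough Python sketch. Every number here is hypothetical: 5,000 communities, each expecting 10 cases, with 20 or more cases treated as an alarming cluster. It also assumes cases occur independently and follow a Poisson distribution, which real cancer data may not.

```python
import math


def chance_of_at_least(expected, threshold):
    """Poisson probability of `threshold` or more cases when `expected` is typical."""
    below = sum(
        math.exp(-expected) * expected**k / math.factorial(k)
        for k in range(threshold)
    )
    return 1 - below


communities = 5_000    # hypothetical number of similar-sized towns
expected_cases = 10    # hypothetical typical case count per town
alarming_count = 20    # twice the expected number

p_one_town = chance_of_at_least(expected_cases, alarming_count)
towns_by_chance = communities * p_one_town

print(f"Chance a given town has {alarming_count}+ cases: {p_one_town:.2%}")
print(f"Towns expected to look like clusters by chance: {towns_by_chance:.0f}")
```

Under these assumptions, a doubling of cases is rare for any single town, yet more than a dozen towns would be expected to show one with no local cause at all.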

At this time, when so much medical information and so many scientific findings and statistical claims are readily accessible on the Internet, reporters have even more of an obligation to help consumers evaluate the source and consider possible bias. Reporters do this by always rigorously looking for the numbers and thinking about the points I’ve raised above about how figures can mislead.

Medical reporters don’t have to know scientific answers. Their job obligates them to ask the right questions. And it can be even easier than that. Frequently, I’ve ended an interview by asking, “What’s the question that I should have asked but didn’t?”

I’ve often been surprised by how much I then learned.

Lewis Cope was a science reporter for the Star Tribune (Minn.) for 29 years and is a former president of the National Association of Science Writers. He is coauthor, with the late Victor Cohn, of the second edition of “News & Numbers: A Guide to Reporting Statistical Claims and Controversies in Health and Other Fields” (Iowa State Press, 2001). He is a board member of the Council for the Advancement of Science Writing.
