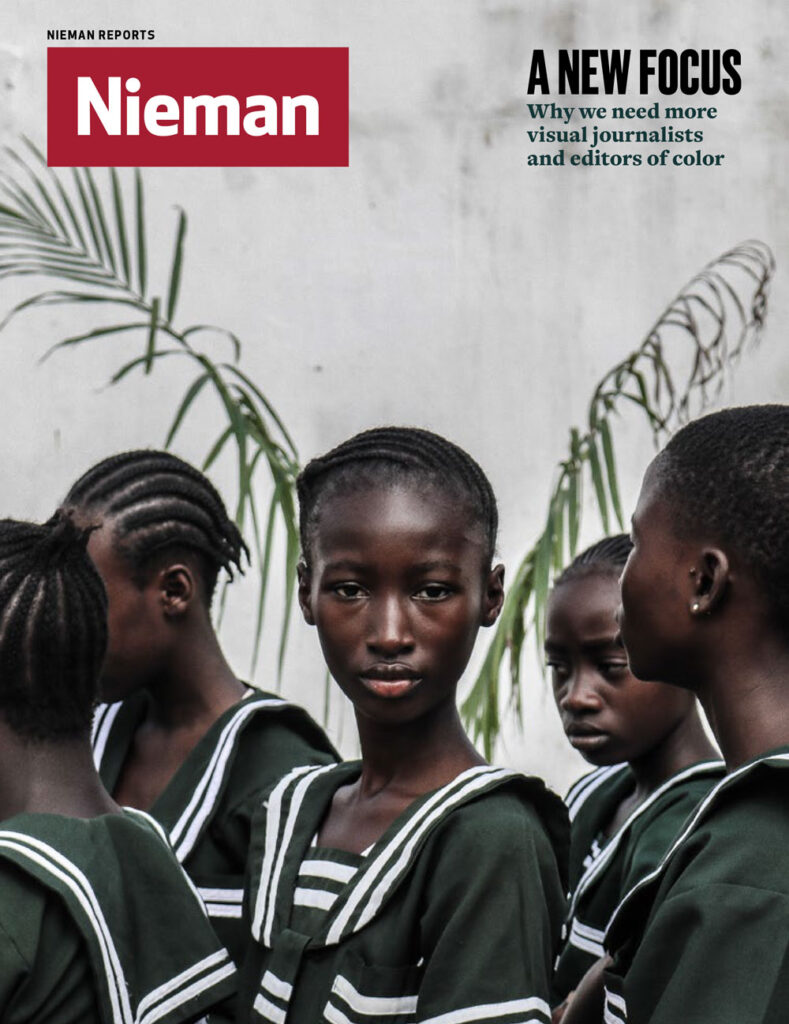

Zeynep Tufekci is a scholar of social movements and the technologies on which they rely. In “Twitter and Tear Gas: The Power and Fragility of Networked Protest,” published May 16 by Yale University Press, she draws on her observations at marches in Istanbul, Cairo, and New York to examine how the distribution of news on social media can have a profound effect on social movements. An excerpt:

Social media platforms increasingly use algorithms (complex software) to sift through content and decide what to surface, prioritize, and publicize, and what to bury. These platforms host and distribute user-generated content from hundreds of millions, if not billions, of people, but most platforms do not and cannot show everything to everyone. Even Twitter, which used to show content in reverse chronological order (the most recently posted content is seen first), is increasingly shifting to algorithmic control.

Perhaps the most important such algorithm for social movements is the one Facebook uses to sort, prioritize, and filter everyone’s “news feed” according to criteria the company decides. Google’s success, likewise, depends on its page-ranking algorithm, which distills a page of links from the billions of possible responses to a search query.

Algorithmic control can mean the difference between widespread visibility and burial of content. For social movements, an algorithm can be a strong tailwind or a substantial obstacle. Algorithms can also shape social movement tactics as a movement’s content producers adapt or transform their messages to be more algorithm friendly.

Consider how the Black Lives Matter movement, now nationwide in the United States, encountered significant algorithmic resistance on Facebook in its initial phase. After a police officer killed an African American teenager in Ferguson, Missouri, in August 2014, there were protests in the city that later sparked nationwide demonstrations against racial inequalities and the criminal justice system. However, along the way, this burgeoning movement was almost tripped up by Facebook’s algorithm.

The protests had started out small and local. The body of Michael Brown, the black teenager shot and killed by Ferguson police officer Darren Wilson on August 9, had been left in the street for hours. The city was already rife with tensions over race and policing methods. Residents were upset and grieving. There were rumors that Brown’s hands had been up in the air when he was shot.

When the local police in Ferguson showed up at the first vigils with an aggressive stance, accompanied by dogs, the outrage felt by residents spread more broadly and brought in people who might not have been following the issue on the first day. The Ferguson situation began to attract some media attention. There had been tornadoes in Missouri around that time that had drawn some national journalists to the state. As reports of the use of tear gas during nightly protests started pouring in, journalists went to Ferguson. Ferguson residents started live-streaming video as well, although at this point, the protests were mostly still a local news story.

On the evening of August 13, the police appeared on the streets of Ferguson in armored vehicles and wearing military gear, with snipers poised in position and pointing guns at the protesters. That is when I first noticed the news of Ferguson on Twitter—and was startled at such a massive overuse of police force in a suburban area in the United States. The pictures, essentially showing a military-grade force deployed in a small American town, were striking. The scene looked more like Bahrain or Egypt, and as the Ferguson tweets spread, my friends from those countries started joking that their police force might have been exported to the American Midwest.

Later that evening, as the streets of Ferguson grew tenser and the police presence escalated even further, two journalists from prominent national outlets, The Washington Post and The Huffington Post, were arrested while they were sitting in a McDonald’s charging their phones. The situation was familiar to activists and journalists around the world because McDonald’s and Starbucks are where people go to charge their batteries and access Wi-Fi. The arrest of the reporters roused more indignation and focused the attention of many other journalists on Ferguson.


On Twitter, which was still sorted chronologically at the time, the topic became dominant among the roughly one thousand people around the world whom I follow. Many people were wondering what was going on in Ferguson; even people from other countries were commenting. On Facebook’s algorithmically controlled news feed, however, it was as if nothing had happened. I wondered whether it was me: were my Facebook friends just not talking about it? I tried to override Facebook’s default options to get a straight chronological feed. Some of my friends were indeed talking about the Ferguson protests, but the algorithm was not showing the story to me. It was difficult to assess fully, as Facebook keeps switching people back to an algorithmic feed, even if they choose a chronological one.

As I inquired more broadly, it appeared that Facebook’s algorithm may have decided that the Ferguson stories were lower priority to show to many users than other, more algorithm-friendly ones. That algorithm is an opaque, proprietary formula that changes every week and that can cause huge shifts in news traffic, making or breaking the success and promulgation of individual stories or even affecting whole media outlets. Instead of news of the Ferguson protests, my own Facebook news feed was dominated by the “Ice Bucket Challenge,” a worthy cause in which people poured buckets of cold water over their heads and, in some cases, donated to an amyotrophic lateral sclerosis (ALS) charity. Many other people were reporting a similar phenomenon.

There is no publicly available detailed and exact explanation about how the news feed determines which stories are shown high up on a user’s main Facebook page, and which ones are buried. If one searches for an explanation, the help pages do not provide any specifics beyond saying that the selection is “influenced” by a user’s connections and activity on Facebook, as well as the “number of comments and likes a post receives and what kind of a story it is.” What is left unsaid is that the decision maker is an algorithm, a computational model designed to optimize measurable results that Facebook chooses, like keeping people engaged with the site and, since Facebook is financed by ads, presumably keeping the site advertiser friendly.

Facebook’s decisions in the design of its algorithm have great power, especially because there is a tendency for users to stay within Facebook when they are reading the news, and they are often unaware that an algorithm is determining what they see. In one study, 62.5 percent of users had no idea that the algorithm controlling their feed existed, let alone how it worked. This study used a small sample in the United States, and the subjects were likely more educated about the internet than many other populations globally, so this probably underestimates the degree to which people worldwide are unaware of the algorithm and its influence. I asked a class of 20 bright and inquisitive students at the University of North Carolina, Chapel Hill, a flagship university where I teach, how they thought Facebook decided what to show them on top of their feed. Only two knew it was an algorithm. When their friends didn’t react to a post they made, they assumed that their friends were ignoring them, since Facebook does not let them know who did or didn’t see the post. When I travel around the world or converse with journalists or ethnographers who work on social media, we swap stories of how rare it is to find someone who understands that the order of posts on her or his Facebook feed has been chosen by Facebook. The news feed is a world with its own laws, and the out-of-sight deities who rule it are Facebook programmers and the company’s business model. Yet the effects are so complex and multilayered that it often cannot be said that the outcomes correspond exactly to what the software engineers intended.

Our knowledge of Facebook’s power mostly depends on research that Facebook explicitly allows to take place and on willingly released findings from its own experiments. It is thus only a partial, skewed picture. However, even that partial view attests to how much influence the platform wields.

In a Facebook experiment conducted on a whopping 61 million people and published in Nature, one randomly selected portion of this group received a neutral message to “go vote,” while another, also randomly selected, saw a slightly more social version of the encouragement: small thumbnail pictures of a few of their friends who reported having voted were shown within the “go vote” pop-up. The researchers measured that this slight tweak, completely within Facebook’s control and conducted without the consent or notification of any of the millions of Facebook users, caused about 340,000 additional people to turn out to vote in the 2010 U.S. congressional elections. (The true number may even be higher, since the method of matching voter files to Facebook names only works for exact matches.) That significant effect, from a one-time, single tweak, is more than four times the number of votes that determined that Donald Trump would win the 2016 U.S. presidential election.


In another experiment, Facebook randomly selected whether users saw posts with slightly more upbeat words or more downbeat ones; the result was correspondingly slightly more upbeat or downbeat posts by those same users. Dubbed the “emotional contagion” study, this experiment sparked international interest in Facebook’s power to shape a user’s experience since it showed that even people’s moods could be affected by choices that Facebook made about what to show them, from whom, and how. Also, for many, it was a revelation that Facebook made such choices at all, once again revealing how the algorithm operates as a hidden shaper of the networked public sphere.

Facebook’s algorithm did not prioritize posts about the Ice Bucket Challenge over Ferguson posts because of a nefarious plot by Facebook’s programmers or marketing department to bury the nascent social movement. It did not matter whether its programmers or even its managers were sympathetic to the movement. The algorithm they designed and whose priorities they set, combined with the signals they allowed users on the platform to send, created that result.

Facebook’s primary signal from its users is the infamous “Like” button. Users can click on “Like” on a story. “Like” clearly indicates a positive stance. The “Like” button is also embedded in millions of web pages globally, and the blue thumbs-up sign that goes with the “Like” button is Facebook’s symbol, prominently displayed at the entrance to the company’s headquarters at One Hacker Way, Menlo Park, California. But there is no “Dislike” button, and until 2016, there was no way to quickly indicate an emotion other than liking. The prominence of “Like” within Facebook obviously fits with the site’s positive and advertiser-friendly disposition.

But “Like” is not a neutral signal. How can one “like” a story about a teenager’s death and ongoing, grief-stricken protests? Understandably, many of my friends were not clicking on the “Like” button for stories about the Ferguson protests, which meant that the algorithm was not being told that this was an important story that my social network was quite interested in. But it is easy to give a thumbs-up to a charity drive that involved friends dumping ice water on their heads and screeching because of the shock in the hot August sun.

From press reporting on the topic and from Facebook’s own statements, we know that Facebook’s algorithm is also positively biased toward videos, mentions of people, and comments. The ALS Ice Bucket Challenge generated many self-made videos, comments, and urgings to others to take the challenge by tagging them with their Facebook handles. In contrast, Ferguson protest news was less easy to comment on. What is one supposed to say, especially given the initial lack of clarity about the facts of the case and the tense nature of the problem? No doubt many people chose to remain silent, sometimes despite intense interest in the topic.
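To make the mechanism concrete, here is a toy ranking sketch. It is not Facebook’s formula, which is proprietary; the signal names, the weights in `WEIGHTS`, and the two example posts are hypothetical, chosen only to illustrate the point above: a ranker that sees only Likes, comments, tags, and videos has no way to register that many people read a post they did not feel able to “Like.”

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only; Facebook's real formula is not public.
WEIGHTS = {"likes": 1.0, "comments": 2.0, "tags": 1.5, "video": 10.0}


@dataclass
class Post:
    title: str
    likes: int
    comments: int
    tags: int       # friends tagged/mentioned in the post or its comments
    is_video: bool
    readers: int    # people who actually read the post; invisible to this ranker


def engagement_score(post: Post) -> float:
    """Score a post from visible engagement signals only (readers is ignored)."""
    return (
        WEIGHTS["likes"] * post.likes
        + WEIGHTS["comments"] * post.comments
        + WEIGHTS["tags"] * post.tags
        + (WEIGHTS["video"] if post.is_video else 0.0)
    )


def rank(posts: list[Post]) -> list[Post]:
    """Return posts ordered from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Ferguson protest update", likes=3, comments=1, tags=0, is_video=False, readers=80),
    Post("Ice Bucket Challenge video", likes=25, comments=12, tags=5, is_video=True, readers=40),
]

for post in rank(posts):
    print(f"{engagement_score(post):5.1f}  {post.title}  (read by {post.readers})")
```

With these made-up numbers the Ice Bucket Challenge video scores 66.5 and the Ferguson post 5.0, even though the sketch assumes twice as many people read the Ferguson post; the `readers` field never enters the score.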

The platforms’ algorithms often contain feedback loops: once a story is buried, even a little, by the algorithm, it becomes increasingly hidden. The fewer people see it in the first place because the algorithm is not showing it to them, the fewer are able to choose to share it further, or even to signal to the algorithm that it is an important story. This can cause the algorithm to bury the story even deeper in an algorithmic spiral of silence.
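The feedback loop can be illustrated with a small deterministic simulation, again a hypothetical sketch rather than any platform’s actual code. It assumes that both stories start with equal scores, that the feed hands out a fixed number of impressions in proportion to each story’s current score, and that viewers of the Ferguson story are far less likely to send a rankable signal (a Like or comment) than viewers of the Ice Bucket Challenge. Every number here is an illustrative assumption.

```python
# Hypothetical parameters; illustrative only.
def simulate(rounds: int, slots: int = 1000) -> list[float]:
    """Return the Ferguson story's impressions in each round of a toy feed."""
    scores = {"Ferguson": 10.0, "Ice Bucket": 10.0}       # equal starting scores
    signal_rate = {"Ferguson": 0.02, "Ice Bucket": 0.10}   # chance a viewer Likes/comments
    history = []
    for _ in range(rounds):
        total = sum(scores.values())
        # The feed allocates its fixed impressions in proportion to current score.
        impressions = {name: slots * s / total for name, s in scores.items()}
        history.append(impressions["Ferguson"])
        # Only viewers can send signals, so fewer impressions mean fewer new signals.
        for name in scores:
            scores[name] += impressions[name] * signal_rate[name]
    return history


for i, imp in enumerate(simulate(rounds=6), start=1):
    print(f"round {i}: Ferguson impressions = {imp:.1f}")
```

Under these assumptions the Ferguson story’s impressions fall from 500 to 250 to about 156 over the first three rounds and keep falling: each round it is seen less, so it collects fewer signals, so it is seen even less.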

The power to shape experience (or perhaps elections) is not limited to Facebook. For example, rankings by Google—a near monopoly in searches around the world—are hugely consequential. A politician can be greatly helped or greatly hurt if Google chooses to highlight, say, a link to a corruption scandal on the first page of its results or hide it in later pages where very few people bother to click. A 2015 study suggested that slight changes to search rankings could shift the voting preferences of undecided voters.

Ferguson news managed to break through to national consciousness only because there was an alternative platform without algorithmic filtering and with sufficient reach. On the chronologically organized Twitter, the topic grew to dominate discussion, trending locally, nationally, and globally and catching the attention of journalists and broader publics. After three million tweets, the national news media started covering the story too, although not until well after the tweets had surged. At one point, before mass-media coverage began, a Ferguson live-stream video had about forty thousand viewers, about 10 percent of the nightly average on CNN at that hour. Meanwhile, two seemingly different editorial regimes, one algorithmic (Facebook) and one edited by humans (mass media), had simultaneously been less focused on the Ferguson story. It is worth asking whether, without Twitter’s reverse chronological stream, which allowed its users to amplify content as they chose, unmediated by an algorithmic gatekeeper, the news of unrest and protests would ever have made it onto the national agenda.

The proprietary, opaque, and personalized nature of algorithmic control on the web also makes it difficult even to understand what drives visibility on platforms, what is seen by how many people, and how and why they see it. Broadcast television can be monitored by anyone to see what is being covered and what is not, but the individualized algorithmic feed or search results are visible only to their individual users. This creates a double challenge: if the content a social movement is trying to disseminate is not being shared widely, the creators do not know whether the algorithm is burying it, or whether their message is simply not resonating.

If the nightly television news does not cover a protest, the lack of coverage is evident for all to see and even to contest. In Turkey, during the Gezi Park protests, lack of coverage on broadcast television networks led to protests: people marched to the doors of the television stations and demanded that the news show the then-widespread protests. However, there is no transparency in algorithmic filtering: how is one to know whether Facebook is showing Ferguson news to everyone else but him or her, whether there is just no interest in the topic, or whether it is the algorithmic feedback cycle that is depressing the updates in favor of a more algorithm-friendly topic, like the ALS charity campaign?


Algorithmic filtering can produce complex effects. It can result in more polarization and at the same time deepen the filter bubble. The bias toward “Like” on Facebook promotes the echo-chamber effect, making it more likely that one sees posts one already agrees with. Of course, this builds upon the pre-existing human tendency to gravitate toward topics and positions one already agrees with—confirmation bias—which is well demonstrated in social science research. Facebook’s own studies show that the algorithm contributes to this bias by making the feed somewhat more tilted toward one’s existing views, reinforcing the echo chamber.

Another type of bias is “comment” bias, which can promote visibility for the occasional quarrels that have garnered many comments. But how widespread are these problems, and what are their effects? It is hard to study any of this directly because the data are owned by Facebook—or, in the case of search, Google. These are giant corporations that control and make money from the user experience, and yet the impact of that experience is not accessible to study by independent researchers.

Social movement activists are greatly attuned to this issue. I often hear of potential tweaks to the algorithms of major platforms from activists who are constantly trying to reverse-engineer them and understand how to get past them. They are among the first people to notice slight changes. Groups like Upworthy have emerged to produce political content designed to be Facebook algorithm friendly and to go viral. However, this is not a neutral game. Just as attracting mass-media attention through stunts came with political costs, playing to the algorithm comes with political costs as well. Upworthy, for example, has ended up producing many feel-good stories, since those are easy to “Like” and thus please Facebook’s algorithm. Would the incentives to appease the algorithm push social movements toward feel-good content (which gets “Likes”) along with quarrelsome, extreme claims (which tend to generate comments)? And even if some groups held back, would the ones that played better to the algorithm dominate the conversation? This also makes movements vulnerable in new ways. When Facebook tweaked its algorithm to punish sites that strove for this particular kind of virality, Upworthy’s traffic suddenly fell by half. The game never ends; new models of virality pop up quickly, sometimes rewarded and other times discouraged by the central platform according to its own priorities.

The two years after the Ferguson story saw many updates to Facebook’s algorithm, and a few appeared to be direct attempts to counter the biases that had surfaced about Ferguson news. The algorithm started taking into account the amount of time a user spent hovering over a news story (not necessarily clicking on it, but looking at it and perhaps pondering it), in an attempt to catch important stories that people might not like or comment on. And, as previously noted, programmers implemented a set of somewhat harder-to-reach but available Facebook reactions ranging from “sad” to “angry” to “wow.” The “Like” button, however, remains preeminent, and so does its oversized role in determining what spreads or disappears on Facebook.
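A toy example suggests why a time-spent signal could help a story like Ferguson. The `score` function, its weights, and the per-post totals below are hypothetical; the sketch only assumes, as the paragraph describes, that time spent looking at a story is added as a signal alongside Likes and comments.

```python
# Hypothetical weights and totals, for illustration only.
def score(likes: int, comments: int, dwell_seconds: float,
          dwell_weight: float = 0.0) -> float:
    """Toy ranking score; dwell_weight=0.0 gives a Like/comment-only ranker."""
    return likes + 2 * comments + dwell_weight * dwell_seconds


# Totals over the same audience: many people lingered on the Ferguson post
# without liking it, while the Ice Bucket post drew quick Likes and comments.
ferguson = {"likes": 3, "comments": 1, "dwell_seconds": 900.0}
ice_bucket = {"likes": 25, "comments": 12, "dwell_seconds": 200.0}

for w in (0.0, 0.1):
    f = score(**ferguson, dwell_weight=w)
    i = score(**ice_bucket, dwell_weight=w)
    leader = "Ferguson" if f > i else "Ice Bucket"
    print(f"dwell_weight={w}: Ferguson={f:.1f}, Ice Bucket={i:.1f} -> {leader} first")
```

With `dwell_weight=0.0` the Ice Bucket Challenge post leads (49.0 vs. 5.0); with the hypothetical weight of 0.1, the Ferguson post, which people lingered on without liking, moves ahead (95.0 vs. 69.0). The sketch cannot say how much weight, if any, Facebook’s real ranking gives such a signal.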

In May 2016, during a different controversy about potential bias on Facebook, a document first leaked to The Guardian and then released by Facebook showed a comparison of “trends” during August 2014. In an indirect confirmation that the Ferguson story had been overshadowed, the internal Facebook document showed that the ALS Ice Bucket Challenge had overwhelmed the news feed and that posts about Ferguson had trailed.

Under pressure from Wall Street and advertisers, more and more platforms, including Twitter, are moving toward algorithmic filtering and gatekeeping. On Twitter, an algorithmically curated presentation of “the best tweets first” is now the default, and switching to a chronological presentation requires navigating to the settings menu. Algorithmic governance, it appears, is the future, and algorithms are the new overlords with which social movements must grapple.

The networked public sphere is not a flat, open space with no barriers and no structures. Sometimes, the gatekeepers of the networked public sphere are even more centralized and sometimes even more powerful than those of the mass media, although their gatekeeping does not function in the same way. Facebook and Google are perhaps historically unprecedented in their reach and their power, affecting what billions of people see on six continents (perhaps seven; I have had friends contact me on social media from Antarctica). As private companies headquartered in the United States, these platforms are within their legal rights to block content as they see fit. They can unilaterally choose their naming policies, allowing people to use pseudonyms or not. Their computational processes filter and prioritize content, with significant consequences.

This means a world in which social movements can potentially reach hundreds of millions of people after a few clicks without having to garner the resources to challenge or even own mass media, but it also means that their significant and important stories can be silenced by a terms-of-service complaint or by an algorithm. It is a new world for both media and social movements.

Excerpted from “Twitter and Tear Gas: The Power and Fragility of Networked Protest” by Zeynep Tufekci, published by Yale University Press 2017. Used with permission. All rights reserved.