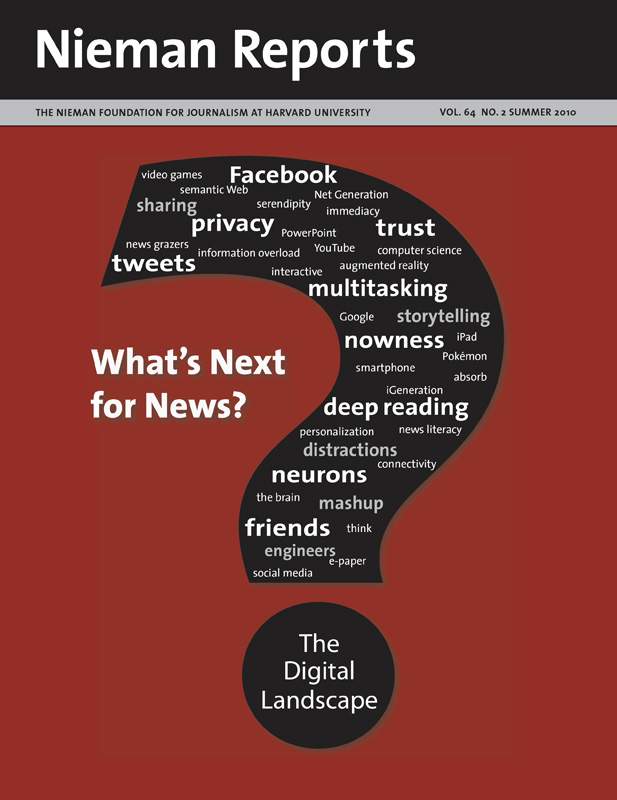

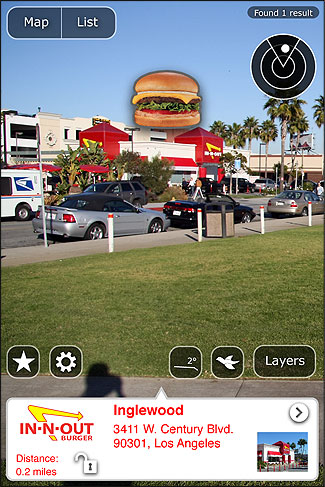

Using an augmented reality browser, a person can see different layers of information overlaid on the live view from their smartphone’s camera. Photo courtesy of Layar.

Imagine that you are able to see invisible information draped across the physical world. As you walk around, you see labels telling the history of a place and offering geographic attributes of the space as you walk through. There’s the name of a tree, the temperature, the age of a building, and the conduits under the pavement. Popping into view are operating instructions for devices. All of this is digitally overlaid and visible across the physical world.

This yet-to-be-realized experience is a thought experiment for helping us interact with a future that is moving inexorably toward us.

Web browsers enable us to view hyperlinked media on a page. Now the Web browser is our camera view as we interact with digitally linked media that are attached to the real world. We can experience this now through mobile technology’s augmented reality (AR) on a smartphone using apps like Layar, Wikitude and Junaio. Eventually we’ll be able to do so with augmented reality glasses. In time, we’ll do this through our vehicle’s windows, too, then with digitally augmented contact lenses, and on go the possibilities. Right now data are geocoded so the information is identified with latitude and longitude, though for many future AR applications, it will be necessary to include data about a digital object’s elevation.

The first generation of smartphone augmented-reality viewers can see only a few narrow glimpses of the increasing mass of geocoded data and media available nearby. Yet there’s a growing flood of location-based map data, geocoded Web pages, and live sensor data digitally available using newly opened standards like Keyhole Markup Language (KML). This map description language is used by Google Maps and Google Earth and is named after Keyhole, the company that developed Keyhole Earth Viewer, which was renamed Google Earth after Google acquired the company. According to Google, people have already created and posted more than two billion place marks (digital objects attached to a place) and a few hundred million more using another Web standard called geoRSS. This location coding is an extension of RSS (Really Simple Syndication), which is widely used by news organizations. And now Twitter’s new geocoding tools are being used to add location coordinates to millions of tweets.
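To make the idea of a place mark concrete, here is a minimal sketch in Python of reading one from KML. The sample document, the approximate Golden Gate Bridge coordinates, and the function name `parse_placemarks` are illustrative assumptions, not taken from any real feed. The one detail drawn from the KML format itself is that a point's coordinates are written as longitude, latitude and an optional altitude, in that order.

```python
import xml.etree.ElementTree as ET

# KML 2.2 elements live in this XML namespace.
KML_NS = {"kml": "http://www.opengis.net/kml/2.2"}

# Illustrative sample only; the coordinates are approximate.
SAMPLE_KML = """<kml xmlns="http://www.opengis.net/kml/2.2">
  <Placemark>
    <name>Golden Gate Bridge</name>
    <description>Illustrative place mark.</description>
    <Point>
      <coordinates>-122.4783,37.8199,67</coordinates>
    </Point>
  </Placemark>
</kml>"""


def parse_placemarks(kml_text):
    """Return a list of dicts, one per point Placemark in the KML text."""
    root = ET.fromstring(kml_text)
    placemarks = []
    for pm in root.iter("{http://www.opengis.net/kml/2.2}Placemark"):
        coords = pm.findtext("kml:Point/kml:coordinates", namespaces=KML_NS)
        if coords is None:
            continue  # skip place marks that are not simple points
        parts = coords.strip().split(",")
        placemarks.append({
            "name": pm.findtext("kml:name", default="", namespaces=KML_NS),
            "lon": float(parts[0]),  # KML order is lon, lat, alt
            "lat": float(parts[1]),
            # Altitude is optional; many place marks omit it.
            "alt": float(parts[2]) if len(parts) > 2 else None,
        })
    return placemarks


if __name__ == "__main__":
    for place in parse_placemarks(SAMPLE_KML):
        print(place)
```

The `alt` field is returned as `None` when a place mark carries no altitude, which is the common case for data that records only latitude and longitude.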

The beauty of these standard codes is that ideally they can be viewed on any browser, just as an HTML Web page is now easily read on commonly used browsers such as Firefox, Internet Explorer, Safari or Opera. Similarly, KML and geoRSS location data can be viewed on any map program from Google, Microsoft, Yahoo!, or companies such as ESRI, which is the dominant map software company.

Unfortunately our GPS-equipped phones can’t calculate location accurately enough to precisely display geocoded information that is closer than about 5 to 20 meters. This is a technical limitation of both GPS and the data. Nor does most geocoded information yet include precise 3D coordinates of latitude, longitude and elevation. Instead, with this first generation of mobile devices, most of the apps rely on the GPS and an internal compass to show viewers the general, but not the precise, location of the data. This works fine for finding a coffee shop, but is insufficient for learning the finer details of an object or place we can see, for example information about an ornamental decoration on a historic building.
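A rough sketch in Python of how a GPS-and-compass viewer of this kind might place a label is shown here. It is not the method of any particular app: the function names, the spherical-Earth approximation, and the 60-degree horizontal field of view are all assumptions made for illustration. The last function estimates how far a label can swing across the view when the position fix is off by a given number of meters.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius; spherical approximation


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (degrees from north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    # Haversine formula for distance.
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    dist = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    # Initial bearing from the viewer to the object.
    y = math.sin(dlmb) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb))
    bearing = (math.degrees(math.atan2(y, x)) + 360) % 360
    return dist, bearing


def screen_offset(heading_deg, bearing_deg, fov_deg=60.0):
    """Horizontal label position from -1 (left edge) to +1 (right edge).

    Returns None when the object lies outside the assumed field of view.
    """
    # Signed angle between compass heading and object bearing, in [-180, 180).
    delta = (bearing_deg - heading_deg + 180) % 360 - 180
    if abs(delta) > fov_deg / 2:
        return None
    return delta / (fov_deg / 2)


def angular_error_deg(position_error_m, distance_m):
    """Worst-case angular shift of a label caused by a sideways position error."""
    return math.degrees(math.atan2(position_error_m, distance_m))


if __name__ == "__main__":
    dist, bearing = distance_and_bearing(37.0, -122.0, 37.001, -122.0)
    print(round(dist, 1), round(bearing, 1))
    print(screen_offset(350.0, 10.0))
    print(round(angular_error_deg(20.0, 20.0), 1))
```

Under these assumptions, a 20-meter position error for an object 20 meters away can shift its label by 45 degrees, most of the assumed field of view, while the same error for an object 500 meters away shifts it by only a couple of degrees. That is why the approach suits a coffee shop down the street better than a detail on the building in front of you.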

There are, however, some technical developments that will enable the creation and viewing of digital objects with precision in 3D space. These will rely on a photographic recognition capability, which major technology companies such as Nokia, Microsoft, Google, and others are developing. How will they work? By comparing the pattern in the view to a stored pattern. By using the company’s vast network of computers and massive database of images, Google’s visual search application can identify an image of a place like the Golden Gate Bridge; eventually this technology will be able to calculate the “pose” of a camera, i.e., the 3D location, with the field of view and precise distance of the person from the object he is viewing. Nokia’s Point and Find and Microsoft’s Photosynth work similarly and will provide the capability for people to add a precisely located annotation to the real world so that people can discover digital information attached to these physical places.

Most of the apps on the first generation of smartphones rely on GPS and an internal compass to provide general information about a place. Photo courtesy of Layar.

Augmented Reality and Journalism

Until now, our knowledge of the physical world has been limited to what we can carry around with us to help make sense of it. With the Internet and now with relatively inexpensive wireless mobile devices, our ability to make sense of the world as we move through it is greatly enhanced. All we need to do is enter a query and view the information on a digital device. But right now, seeing this information in the real world requires that we launch one of dozens of special iPhone or Google Android phone applications. The Institute for the Future forecasts that by 2015 digital augmentation will work seamlessly and naturally through the first generation of special eyeglasses equipped to show digital data overlaid on the real world. By 2020, perhaps glasses won’t be needed as wireless contact lenses, already in development in University of Washington labs and elsewhere, become available.

Just as the hypertext Web changed our interaction with text, emerging augmented reality technologies will reshape how we understand and behave in the physical world. That’s why it’s important that we—in particular, those who transmit information and interpret and describe the world for others, as journalists do—start to think about the implications of physical space being transformed into information space.

I think it’s apparent that we won’t want to see everything about all that we are viewing. If we did, our natural vision would be blocked completely. This means we’ll need to think hard about developing ways to query the information we want and filter from our view potentially vast amounts of information that we don’t want. In any place we go, there will exist the potential for a bombardment of information that has been aggregated about the thousands of digital objects that describe in some way our physical environment—its infrastructure, history, culture, commerce and politics. In the mix will be social and personal information and sometimes even fictional events with this place as a backdrop.

With access to this realm of information, complex objects will become self-explanatory. People will be able to display operating and maintenance instructions in text, graphics or even in geolocated video and sound. And just as it will be very easy to encode and view facts about a place, it will be similarly simple to overlay the place with fictional art and media. And when this happens, even neutral spaces can be transformed into sensory rich entertainment experiences.

As travelers in information, journalists will have the job of making sense of this new world and they will do this with a fresh palette of digital tools. It will be incumbent on them to find ways to tell stories about our new blended realities, using tools that make possible these new dimensions. Imagine the possibilities of being able to describe in detail the cultural and social histories of a place that foreshadowed a newsworthy event—and do this in augmented reality so people can visualize all of this.

There is also the opportunity to overlay statistical probabilities of an event occurring. For example, if a driver looking for a parking space can see that there have been many auto thefts nearby, he might decide to park somewhere else. If people could discover that there is a very high incidence of communicable diseases near a restaurant, they might choose to eat elsewhere.

News organizations and start-up entrepreneurs are only beginning to explore the potential of augmented reality. Map out where news stories occur at a moment in time and you surely will find stories from Washington, D.C. and Baghdad, others from Afghanistan, and a mixture of local coverage. However, if we gather stories related to a place over a longer period of time, there is a higher density of news present in that place. And so both the temporal and spatial density of information in any one place can become a more complete and richer resource.

Recently Microsoft demonstrated how we’ll be able to see old photos and videos of an earlier time overlaid directly and precisely on a rendering of the current environment. This will enable us to understand better (by seeing) what a place was like at another time. Indeed, it’s likely that we’ll be able to see recorded video ghosts of people walking down the street and listen to them describe experiences from another time in this exact place.

This futuristic vision of the digital augmentation of our real world seems quite strange, even disorienting, to our contemporary sensibilities. In its entirety, it is not yet imminent. We have ample time to explore the implications, challenges, dilemmas and opportunities that it poses.

At the Institute for the Future, we help people to systematically think about the future by following a basic methodology of planning for disruptive changes ahead; the process involves three stages—foresight to insight to action. Foresight happens by gathering and synthesizing expert opinions; one expert might be able to describe a clear view, but one limited by her own realm of expertise. By gathering views from multiple experts, we start to see things at the intersection of various views. Then by developing these vivid views of the possible future, we arrive at what we can grasp as the probable future. The next step is to select actions to build what is seen as a desirable future that is based on contextual insights.

Through this process, one overarching theme remains at the center of our thinking. We are not victims of the future; we can shape our own futures. The takeaway for journalists is that the tools and probable use of augmented reality are not the stuff of science fiction. To those who find ways to use this technology to tell stories—to gather and distribute news—will flow the audience.

Mike Liebhold is a senior researcher and distinguished fellow at the Institute for the Future, an independent nonprofit research group in Palo Alto, California.