When a Twitter user named @Guccifer_2 direct messaged reporter Sheera Frenkel in June 2016, offering hacked emails from the Democratic National Committee, she demonstrated an instinct that will be crucial for journalists covering the election this fall.

“Can you explain where these emails came from?” she recalls asking over and over. “I want to understand how you got them.”

Frenkel, a relatively new cybersecurity reporter for BuzzFeed who has since joined The New York Times, never received a satisfactory response. So she spent the next five months digging for answers. On October 15, amid a flurry of coverage of the salacious details in internal Democratic emails, she published a blockbuster piece looking at the larger context, which turned out to be a crucial issue for America’s election security.

“Meet Fancy Bear, The Russian Group Hacking the U.S. Election,” the headline trumpeted. Guccifer 2.0, it turned out, was a fake persona created by a Russian military intelligence agency to disseminate hacked emails and shape the narratives around the election.

Even as media organizations have gradually gotten more up to speed on how to watch out for trolls and debunk misinformation, there’s an unresolved dilemma: how to responsibly handle hack-and-leak operations like the one Frenkel uncovered, which involve authentic, newsworthy documents but which are put forward at a time and in a manner that serves the agenda of bad actors. Finding the right balance between covering the context and covering the contents of the documents can be tricky. With no major operation targeting U.S. politics since 2016, it’s unclear how well American journalists would handle such a dump this time around.

“Now there’s much more focus on developing those resources for training journalists to understand the role they play in state operations,” says Renée DiResta, research manager at the Stanford Internet Observatory and co-author of its 2019 report analyzing Russia’s manipulation of U.S. media, “Potemkin Pages & Personas.” “At the same time though, we’re all waiting to see what happens when the next big trove of hacked docs comes out.”

Amid a pandemic, the Black Lives Matter protests, America’s greatest social upheaval since 1968, and the threat of interference in a high-stakes election this fall, the atmosphere is particularly ripe for exploitation. Fear, anger, and intensifying partisan divisions, especially on issues like race and election security, make people more susceptible to believing misleading information — and amplifying it or even acting on it.

Meanwhile, the media industry, already grappling with declining public trust, aggressive attacks on its integrity, and systemic financial woes that have shuttered or strained many local news outlets, is now confronting an additional vulnerability: the proliferation of disinformation that targets journalists in a bid to weaponize their megaphones or drown them out, undermining the credibility and clout of the free press.

As experts and lawmakers have unraveled the web of deception Moscow spun around the 2016 election and continues to spin around this year’s vote, they have revealed a wide range of techniques Russia and others have used to exploit the credibility of journalists in the U.S. and other democracies. These included creating trolls, whose social media posts were quoted in dozens of news outlets and erroneously held up as authentic American voices; fabricating freelance “journalists” who seeded Russian narratives into a variety of publications worldwide in a bid to launder those narratives and give them greater credibility; offering journalists hacked emails from the World Anti-Doping Agency in a bid to frame what the hackers called “Anglo-Saxon nations” as power-hungry competitors using clean sport as a pretext for banning Russia from the 2018 Olympic Games; and leaking documents on everything from U.S. politics to secret U.K.-U.S. trade talks, resulting in a flurry of coverage about Russia’s chosen topics on the eve of its adversaries’ national elections.

The documents from the U.K.-U.S. trade talks, which surfaced in the run-up to Britain’s December 2019 election, were leaked by a long-running Russian information campaign called Secondary Infektion — a reference to a Cold War disinformation campaign run by the KGB that aimed to spread the belief that the U.S. government had purposely created HIV/AIDS — whose reach was little understood until very recently. In a June report, top social media analysis firm Graphika revealed for the first time the extent of the operation, which published more than 2,500 pieces of content across 300 platforms from 2014 to 2020, much of it designed to exacerbate tensions between the U.S. and its allies. The operation relied heavily on forged documents, impersonating everyone from prominent U.S. senators to the Committee to Protect Journalists.

To be sure, the CIA has meddled in many countries’ domestic politics, from orchestrating the 1953 coup d’état that ousted Iran’s democratically elected prime minister to planting stories in the Nicaraguan press that undermined the Sandinista government leading up to the 1990 election, paving the way for the opposition’s victory. Scholars and former agents argue, however, that interventions designed to support democratic opposition groups in their fight against authoritarianism are not morally equivalent to the foreign meddling of Russian President Vladimir Putin, who has sought to boost Russia’s power by undermining his opponents at home and abroad — and just secured the public’s support to stay in office until 2036.

Some have criticized journalists for being too quick to suspect that a Russian bogeyman is lurking behind every tweet, thereby exaggerating the influence of foreign disinformation campaigns and absolving elected officials, journalists, and voters of responsibility for the course of American politics. But the point is not that Russia has swept in and begun playing Americans like so many marionettes against their better judgment. Rather, its meddling has cast a fog over American politics and raised doubts about whether the democratic system can be trusted to work properly. Seen in this light, journalists’ suspicions are proof of the efficacy of Russia’s strategy, which is now yielding dividends far beyond the initial investment.

Russia is by no means the sole perpetrator of disinformation campaigns against Western democracies, however; China and Iran are also high-profile purveyors of disinformation targeting U.S. politics, and domestic actors and scammers can play a role as well.

In 2017, for example, white supremacists and others impersonating Antifa on social media sought to discredit the loosely affiliated far-left organization and bait journalists into thinking the accounts were authentic, an effort highlighted in a September 2019 Data & Society report co-authored by Joan Donovan of Harvard. The following year, anonymous social media users seeded the viral anti-immigrant slogan “Jobs Not Mobs” into mainstream U.S. media and the political rhetoric ahead of the mid-term elections.

The largest Black Lives Matter page on Facebook, with fundraisers, merchandise for sale, and some 700,000 followers, turned out to be run by a white man from Australia – a case study highlighted by the European Journalism Centre’s new Verification Handbook that provides specific guidance on dealing with disinformation and media manipulation.

And this spring, both Covid-19 and the death of George Floyd while pinned under the knee of a white police officer have sparked a fury of misinformation and disinformation over everything from how the pandemic started to who’s behind the looting that accompanied some nationwide protests over racial injustice.

On June 11, Twitter took down 23,750 accounts involved in a Chinese disinformation campaign, which, among other things, promoted Beijing’s response to the pandemic. It also took down an additional 150,000 “amplifier” accounts that had been used to spread the campaign’s reach by liking and retweeting content. While such efforts do not necessarily target journalists directly, they cloud the media environment and can create powerful countercurrents to fact-based reporting.

“I increasingly feel that for journalists, the Internet is a hostile environment,” says Claire Wardle, co-founder and director of First Draft, who likens the nonprofit’s workshops on countering misinformation and disinformation to a new kind of hostile environment training for navigating the perils of this new digital landscape. “Journalists don’t understand that they are being deliberately manipulated.”

That said, Wardle sees a growing awareness of the threat over the past two years. Frenkel echoes that point: “I think journalists are so much more aware of the role we play in amplifying disinformation.” Also, social media platforms’ increasing transparency in sharing data on manipulation campaigns has helped tremendously, she adds. “It’s night and day, the amount of information we’re able to get from Facebook” compared to 2016, says Frenkel.

In addition, new or newly expanded initiatives have sprung up to help journalists deal with disinformation. The Stanford Internet Observatory and First Draft launched attribution.news, an online resource that includes case studies and best practices for journalists covering cyber incidents and disinformation. It includes tips such as specifying the confidence level of government agencies, experts, and cybersecurity firms in attributing an attack or influence operation; and asking others to challenge one’s research and conclusions in order to discover gaps or biases.

Boston-based cybersecurity firm Cybereason has run seven simulations of Election Day mayhem designed to stress-test the preparedness level of various actors, including journalists. Participants have had to contend with hacked municipal social media accounts, deep fake videos of public officials “saying” things they never said, and disinformation designed to suppress the vote, such as fake reports of ICE agents targeting undocumented residents or police frisking black men on their way to vote.

Israel Barak, Cybereason’s chief information security officer and an expert in cyber warfare, says two key lessons for journalists are establishing validated channels for corroborating information rather than relying on social media, even official accounts, and also guarding against a failure of imagination. “You need to educate yourself through a simulation or other means in the art of the possible,” he says. “Not only what happened in the past, but what is possible.”

First Draft, which has a wealth of information and video seminars on its own website, has organized 15 live simulations across the U.S. that have given hundreds of journalists hands-on experience with disinformation campaigns, followed by master classes on how to respond.

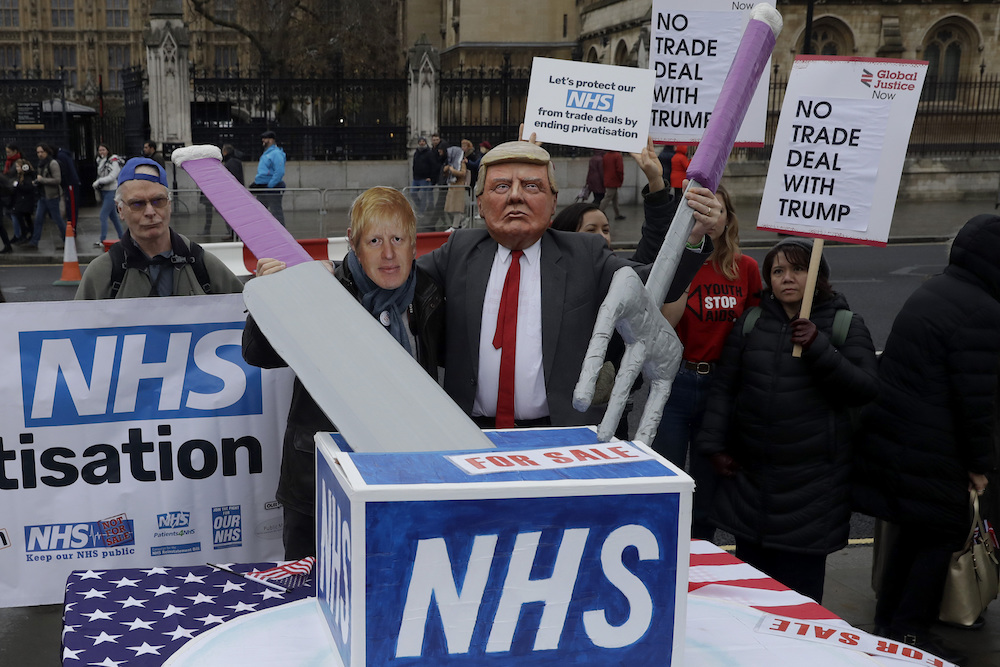

Just weeks before Britain’s election last December, which would determine whether Prime Minister Boris Johnson could follow through with his Brexit plan the following month, the Labour opposition called a press conference to present 451 pages of unredacted documents detailing U.K.-U.S. trade talks, which they said revealed that Johnson’s Tory government had discussed selling the state-run National Health Service. Given that the trade talks were one of the biggest issues in the upcoming election, the documents — which appeared to be real — had genuine news value. But the Labour Party wouldn’t identify the source that had provided the documents to them.

So Jack Stubbs, Reuters’ European cybersecurity correspondent, teamed up with Ben Nimmo, director of investigations for Graphika, to figure out the source of the leak. Neither of them slept much for a week, mapping out how the documents had originally been disseminated online. They knew that the documents had first been posted to Reddit by a user named Gregoriator, who had also tweeted the Reddit post directly to journalists and U.K. politicians. Stubbs and Nimmo concluded that the operation mirrored tactics and techniques previously used by Secondary Infektion. Stubbs revealed their findings in a Dec. 2 article, citing support from Nimmo as well as researchers at the Atlantic Council, Oxford, and Cardiff University.

Within the week, Reddit said it had banned 61 accounts, including Gregoriator, and confirmed that the U.K.-U.S. trade leaks were tied to Secondary Infektion.

People on Twitter argued that it didn’t matter where the documents came from if they were real. But Stubbs disagreed. “Two things can be true — that the documents are genuine and have news value, and that the way that they were first distributed online implies that an outside actor was acting in bad faith,” he says. “Both are newsworthy.”

This raises a crucial question: Should journalists cover newsworthy leaks differently — or even at all — if the documents were brought to light by an information operation?

“In the 21st century, we at all times need to be prepared for information warfare,” says Jack Shafer, a media and politics columnist for Politico. “We can do one of two things — we can confront it head on or we can attempt to ignore it. I submit that ignoring information, even state-sponsored misinformation, is a dereliction of journalistic duty.”

While the agenda of the leaker matters, he says, it should also be weighed against the news value of the leak. “When the information is demonstrably true — a genuine document — then the motive of the leaker may not be the paramount story,” argues Shafer.

After all, even if the intent was malign, governments have historically revealed true information about adversaries. Heidi Tworek, an expert on the international history of communications technology at the University of British Columbia, offers a classic example from the Cold War when East Germany compiled and released the names of ex-Nazis working for the West German government. Although the intent was to undermine West Germany, and the West German government condemned these lists as Communist propaganda, historical investigation revealed that the lists were largely correct.

So should journalists of that day not have published the lists, or should they have focused more on why East Germany was releasing the lists than on who was on them?

“The example shows that journalists have had to wrestle with such questions for a long time,” says Tworek.

Tworek argues that it’s important for journalists to recognize the motive for putting out such documents in the first place, or else they could end up “unintentionally doing the bidding of somebody else for geopolitical purposes.”

To some degree, every leaker has an agenda, acknowledges Bret Schafer, media and digital disinformation fellow at the German Marshall Fund’s Alliance for Securing Democracy in Washington, D.C., which Tworek is also affiliated with. But when the agenda is to inflict damage on a country or on a political candidate for the benefit of a foreign adversary, he adds, the focus should be less on the juicy details buried in the leak and more on questions like: Why do we have it? Why do we have it now? Who could have gotten this and who could have orchestrated the type of leak that happened?

Most of the 2016 coverage of the hacks of the DNC and Clinton campaign chairman John Podesta did the reverse, focusing disproportionately on the contents of stolen emails, rather than digging into who would have had the ability and motivation to pull off the operation — and whose interests it would serve to have those issues covered in the American media.

“There were mainstream front-page articles about the Podesta leaks throughout the last month before the election … That was nearly the entire journalistic community taking the bait,” says Nimmo, a pioneer of investigations into online disinformation campaigns who previously worked as a reporter for Deutsche Presse-Agentur and covered Russian-stoked unrest in Estonia in 2007. “I think they were completely played.”

Robert Mueller’s investigation into Russian meddling in the 2016 election concluded that it was done with the express purpose of sowing discord and dissension among the American people, and weakening their faith in their democratic institutions.

To that end, Moscow exploited one of the institutions most vital to democracy: a free press.

Its approach included two main prongs: creating fake personas to deepen divisions via social media, and hacking sensitive information and then leaking it to the press.

The Internet Research Agency (IRA), a Kremlin-linked outfit in St. Petersburg, reached 126 million people through 470 Facebook accounts and 1.4 million through 3,814 Twitter accounts, according to representatives of the U.S.-based social media companies. A significant number of those tweets tagged journalists. And it worked.

One Russian troll who claimed to be an African-American political science major in New York, @wokeluisa (Luisa Haynes), garnered more than 50,000 followers and was featured in more than two dozen news stories, including in the BBC, Time, and Wired.

And a University of Wisconsin-Madison study of 33 outlets from Fox News to The Washington Post found that all but one of them quoted accounts now known to be Russian trolls.

The other prong of Russia’s strategy — hacking sensitive information and leaking it via personas like @Guccifer_2 and DC Leaks — was orchestrated by the Russian military intelligence agency known as the GRU and had little success on social media, according to the 2019 report, “Potemkin Pages & Personas.” The GRU’s most popular account got only 47.9 likes on average, and garnered less than 7,500 engagements. Yet the report concludes that of all the ways Russia interfered in the 2016 election, the hack-and-leak operation targeting the DNC “arguably had the most impact.”

Why? Because the media picked up that material and amplified it.

Shafer of Politico argues, however, that the coverage was entirely justified because there was “politically actionable news” in those leaks, including how the Democratic Party was sabotaging Bernie Sanders, Chelsea Clinton’s feuding with Doug Band (Clinton called out Band, a longtime Clinton advisor, for his conflicts of interest involving the Clinton Foundation and his private consulting firm), and Donna Brazile’s leaking of CNN town hall questions to Hillary Clinton.

“It’s demonstrably true that there is fascinating news about how the political process works contained in those emails and the fact that the Russians may have been behind it does not make that news off-limits,” says Shafer. “Just because the story might make the Kremlin smile does not mean that journalists should suppress it.”

Some argue that the release of the hacked documents, while newsworthy, appeared designed to distract voters’ attention from important campaign issues or unfavorable press coverage. For example, why did Wikileaks release the first trove of Podesta emails hacked by the Russians within an hour of The Washington Post’s publishing of the “Access Hollywood” recording that revealed Trump’s lewd talk about forcing himself on women?

Podesta and others in the Clinton camp argued that context mattered more than the emails.

Even Nimmo acknowledges it would be unrealistic to expect journalists not to cover newsworthy leaks just because they were brought to light through an information operation. But as a general principle, he says, the context is equally crucial. “Once the leak is out there, the first questions are whether it’s genuine, and whether the content is actually newsworthy. If the answer is ‘Yes’ to both, you’re caught between the content and the context,” says Nimmo. “The only thing you can fairly do as a journalist is give equal weight to both.”

It’s a dilemma American journalists may increasingly face heading into the November election. With social media platforms cracking down on networks of fake accounts like the ones Russia’s IRA used in 2016, Nimmo and other disinformation experts say it’s likely that information operations will shift their efforts more toward direct outreach to journalists. Are newsrooms ready for the next iteration of Guccifer?

“If a Guccifer 3.0 pops up, Facebook and Twitter are going to go after it hard,” says Schafer of the Alliance for Securing Democracy. But, he adds, “I don’t think we’re collectively in a much better place in 2020 than we were in 2015-16 with the hack-and-leak problem.”

He points to media reports that Russia’s GRU had hacked into Burisma, the Ukrainian energy company that figured prominently in President Trump’s impeachment trial. The hacks began in early November 2019, when Joe Biden was facing scrutiny from the Trump administration and others for his son’s position on the company’s board from 2014 to 2019. While no leaks have materialized so far, hackers stole email credentials through a phishing campaign, which media reports speculated could have given the hackers access to information about the Bidens.

Attribution.news, the joint project of the Stanford Internet Observatory and First Draft, critiques coverage of the Burisma hack in one of its case studies, pointing out that the media relied on a thinly-sourced attribution report from an anti-phishing security company to assert Russian involvement, and warns that without proper vetting journalists can become “accomplices of disinformation.”

Attribution.news recommends that in such cases journalists should specify the confidence level of those claiming to have found a hacking operation; not rely on circumstantial evidence, such as politically significant timing, as proof of attribution; ask an expert in technical digital forensics to read the report detailing such a hack and assess the credibility of the entity that produced it; and consider whether coverage of the alleged hack would benefit the perpetrator.

“Media face a huge challenge; they are a target, because people rely on them to make sense of these situations,” DiResta explains. “Less scrupulous, hyper-partisan publications will rush out stories based on the leaked docs without fully vetting them or discussing their origin, which means the public will have a particular perception before the time-consuming work to contextualize what’s real and what actually happened is done.”

In some ways, Covid-19 has offered an opportunity for journalists to test their new skills in uncovering and reporting on disinformation — a dress rehearsal of sorts for November. But many cash-strapped newsrooms haven’t been able to invest in such efforts — and even for those who have, new curve balls could come as the election draws near.

“For the news organizations that haven’t really been able to afford to follow this over the past few years, it is going to be like sprinting uphill to get a handle on election disinformation during the moment where everyone’s focused on Covid-19,” says Joan Donovan, coauthor of the 2019 Data & Society report and research director of the Shorenstein Center on Media, Politics and Public Policy at Harvard Kennedy School.

She foresees significant disinformation around the issue of mail-in ballots, which will be more difficult to cover because of social distancing requirements. And even if the election goes smoothly without any such interference, there’s still a strong potential for undermining the election’s integrity through online channels.

“You won’t actually need evidence of mail-in voter fraud to make the claim appear as true if you can get enough networks of social media to believe it,” says Donovan, citing President Trump’s false claims that Michigan was “illegally” mailing ballots to 7.7 million residents, opening the way for widespread voter fraud. (In fact, Michigan mailed its 7.7 million registered voters paperwork to apply for absentee ballots.)

A key defensive measure, she says, is proactive communication from both journalists and public officials to educate voters about revised voting protocols and establish trust in those authoritative channels — inoculating voters before they’re exposed to swirling rumors or an 11th-hour disinformation campaign.

As for vetting a leaker offering potentially hacked documents, journalists should begin with some key questions, Stubbs says: Who are you? What is your agenda? How can I trust this information?

If you can’t answer those questions, that should give you pause, says Stubbs, who responded to an interview request for this article by asking for confirmation of the writer’s identity, and a DM from her verified Twitter account.

But, he adds, “If you go through the due diligence of trying to answer those questions, you may end up with a bigger story.”

Just ask Frenkel.